Assessment is a Process, Not a Product

By Elizabeth Bledsoe Piwonka, Associate Director of Assessment

Texas A&M University Office of Effectiveness and Evaluation

“And don’t forget to do your assessment – we don’t want any of our programs to be out of compliance!”

This sentence makes me cringe. It is the sentence said at the end of large meetings, as people gather their belongings (back when we met face to face) and start side conversations on their way out the door. It is the sentence that reminds faculty and staff that Big Brother is watching: if they do not check the box by the end of the week, they will probably get a nasty email from some administrator somewhere telling them they will be the reason the institution loses its accreditation status. And once the forgotten, thrown-together report is finally submitted, it will come back six months later covered in red because it failed to meet some standard that was posted somewhere. There was probably an email about it at some point.

This used to be the assessment culture on my campus. Assessment reports were submitted once a year and, for the first few years of this practice, no one read them at all. Then, after the accreditor had some words to say about the quality of a submission, assessment professionals read them all. Every. Single. Report. On a positive note, programs received feedback for the first time, and that feedback was based on their own program rather than on a “Sample Program, BA” with a generic “Students will communicate effectively” outcome like the examples on the website. So documentation of assessment efforts would improve, right?

Now that faculty, department heads, deans, and the provost were looking at a failing report card on assessment practices, the message changed.

“These better have improved for next time!”

“It’s really too bad that the deadline to submit these is in three months… and that the spring semester ends next week, so there aren’t really any faculty around to do anything about it… but here, academic advisor or faculty committee-of-one, fix this.”

This went on for more years than I care to recall. I knew that implementing AEFIS on our campus would let us connect program assessment reporting to other data sources in ways we had not been able to before, and maybe drum up a bit more enthusiasm as people realized the potential of such a process. What I severely underestimated, though, was the extent to which the new software, and the framework it allowed us to set up, would shift the conversation.

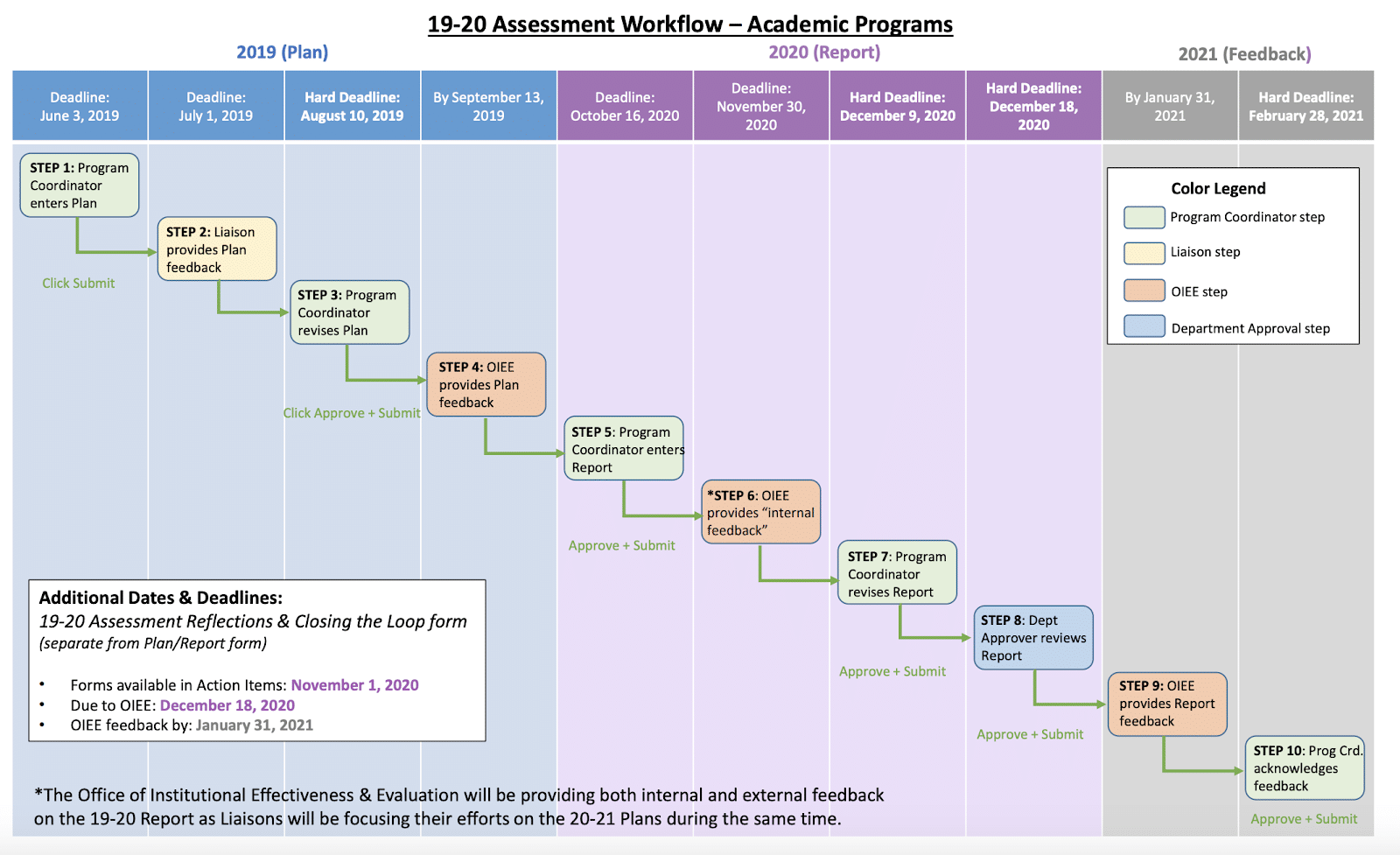

We use two separate data collection forms to facilitate program assessment, one with a 10-step workflow and another with a 3-step workflow. At the outset, it sounds crazy, complicated, and burdensome. However, using workflow steps with soft and hard deadlines spread across nearly 24 months has completely shifted the conversation about assessment from completing a last-minute report to an ongoing dialogue. From a product to a process.

“What should we commit to measuring, and how should we do that?”

“Did students meet the desired level of performance? What does it mean if they did? If they didn’t?”

“How can we make sure they do next time?”

You know, the assessment cycle.

The assessment workflow occurs in three phases: the Assessment Plan, the Assessment Report, and Assessment Reflections and Closing the Loop. The full workflow is available for review here: http://assessment.tamu.edu/assessment/media/Assessment-Resources/19-20-Workflow-Academic-Programs.pdf

Since the assessment workflow was codified, programs are prompted during Phase I to plan their data collection methods and strategies ahead of time. They are asked to commit to a targeted level of performance and to have these plans reviewed by college-specific assessment liaisons or professionals. They also receive centralized feedback from the Office of Institutional Effectiveness & Evaluation, all before any new learning outcome data are collected. Programs can see all of the feedback they have received in a single place while reading or revising their submission, and they can use that feedback in real time to get the best possible results from their assessment efforts.

During Phase II, assessment results are collected, analyzed, and discussed in order to determine actions for continuous improvement. This phase takes place in the fall semester after the academic year in question, which allows time for thorough analysis of the results and makes it possible to engage faculty in discussions about continuous improvement, even though many of them depart for the summer months.

Phase III covers the Assessment Reflections and Closing the Loop. It uses a separate form from the 10-step workflow described above, and it is where programs discuss the implications of previously implemented actions and reflect on the overall assessment process. The forms are kept separate to encourage intentional conversations about the effectiveness of previously implemented actions. Although this form’s workflow is simpler, the line of demarcation helps keep a clear separation between the actions a program is implementing now and the actions from previous years.

Using AEFIS has enabled our campus to change the way it talks about assessment, particularly through our use of workflows. Stretching the assessment reporting cycle, with incremental deadlines along the way, has shifted the conversation about assessment from compliance to purpose, from theory to practice, and from product to process.
